Abstract: Traditional interpolation algorithms often struggle to preserve fine details when scaling video images, especially at high resolutions. To address this issue, a multi-phase interpolation algorithm is employed for video image scaling. This paper elaborates on the working principle of the algorithm and its corresponding hardware architecture. The control logic of the system is implemented on a Xilinx Spartan6 series FPGA. The system can upscale the video signal from any of the four camera input channels to a resolution of 1920x1080 @ 60 Hz. Experimental results demonstrate that the output video is produced in real time and maintains excellent detail quality, making the design suitable for high-performance video processing applications.

0. Preface

Video image scaling, also known as resolution conversion or resampling, is a crucial technique in digital video processing. It directly impacts the visual quality and user experience of the output video. This technology is widely applied in fields such as medical imaging, engineering, multimedia, and video conferencing [1]. Traditional scaling methods include nearest neighbor, bilinear, and bicubic interpolation. Other advanced techniques such as edge detection, B-spline, and adaptive interpolation are also used. However, these conventional approaches may introduce aliasing artifacts, especially at high output resolutions, leading to loss of detail and poor visual quality.

The multi-phase interpolation algorithm has gained popularity due to its superior ability to preserve image details during scaling. It offers better performance compared to traditional methods and is extensively used in industrial applications. This paper explores the implementation of this algorithm on an FPGA-based hardware platform, aiming to improve real-time video processing capabilities while maintaining high-quality output.

1. Algorithm Principle

Video image scaling involves transforming an original image of resolution (M, N) into a target image of resolution (X, Y). Mathematically, this process can be described as mapping pixel values from the input image to the output image. For each pixel in the target image, Pixelout(x, y), the value is calculated based on the function f(i, j, Pixelin(i, j)), where i and j represent the coordinates of the original image, and x and y represent the coordinates of the target image.
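The mapping from target coordinates back to the original image can be sketched as follows. This is a minimal illustration, not the paper's implementation: the phase count of 16, the 720-pixel input width, and the function name `source_position` are assumptions introduced here. The idea commonly used in multi-phase scalers is that the integer part of the mapped position selects the input pixels, while the fractional part selects one of several precomputed coefficient sets (phases).

```python
from fractions import Fraction

NUM_PHASES = 16  # hypothetical phase count; the paper does not state one


def source_position(out_index, in_size, out_size, num_phases=NUM_PHASES):
    """Map an output coordinate to (integer source index, phase index).

    The output pixel is projected back onto the input grid. The integer
    part selects the input pixel at or before that position; the
    fractional part is quantised to one of num_phases coefficient sets.
    """
    # Exact rational position on the input grid (avoids float drift).
    pos = Fraction(out_index * in_size, out_size)
    base = pos.numerator // pos.denominator   # integer part
    frac = pos - base                         # fractional part in [0, 1)
    phase = int(frac * num_phases)            # quantised phase index
    return base, phase


if __name__ == "__main__":
    # Assumed example: scaling a 720-pixel line up to 1920 pixels.
    for x in (0, 1, 4, 8):
        print(x, source_position(x, 720, 1920))
```

Using exact rational arithmetic mirrors what a hardware accumulator with a fixed-point step would do, and keeps the phase index free of rounding surprises.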

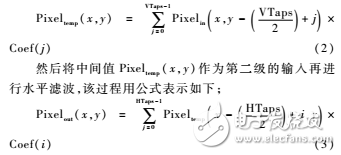

The essence of multi-phase interpolation lies in accurately determining the pixel values of the target image by analyzing the spatial relationship between pixels in the original image. This process typically involves a two-dimensional filtering operation, which can be expressed as:

$$
\mathrm{Pixel}_{out}(x, y) = \sum_{i=0}^{HTaps-1} \sum_{j=0}^{VTaps-1} \mathrm{Pixel}_{in}\left(x - \frac{HTaps}{2} + i,\; y - \frac{VTaps}{2} + j\right) \times \mathrm{Coef}(i, j)
$$

In this formula, HTaps and VTaps denote the number of taps in horizontal and vertical filters, respectively, while Coef(i, j) represents the weight coefficients of the filter. These coefficients determine how much influence each input pixel has on the output pixel, directly affecting the scaling result. Proper selection of these coefficients ensures effective low-pass filtering and anti-aliasing, resulting in a visually pleasing output.
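A direct evaluation of the double sum for a single output pixel might look like the sketch below. It assumes the image is stored as `image[row][column]`, that `coef[i][j]` is indexed with `i` horizontal and `j` vertical as in the formula, and that out-of-range coordinates are clamped to the nearest edge pixel. The paper does not specify edge handling, so the clamping is an assumption, as are the function names.

```python
def clamp(value, low, high):
    """Limit value to the inclusive range [low, high]."""
    return max(low, min(value, high))


def filter_2d(image, coef, x, y):
    """Evaluate the 2D filter sum at position (x, y).

    image: list of rows, image[row][col]
    coef:  coef[i][j], i over horizontal taps, j over vertical taps
    """
    h_taps = len(coef)
    v_taps = len(coef[0])
    height = len(image)
    width = len(image[0])
    acc = 0.0
    for i in range(h_taps):
        for j in range(v_taps):
            # Window centred on (x, y); edges are clamped (assumption).
            sx = clamp(x - h_taps // 2 + i, 0, width - 1)
            sy = clamp(y - v_taps // 2 + j, 0, height - 1)
            acc += image[sy][sx] * coef[i][j]
    return acc


if __name__ == "__main__":
    # A flat image filtered with coefficients that sum to 1 stays flat.
    flat = [[10.0] * 6 for _ in range(6)]
    box = [[1.0 / 16.0] * 4 for _ in range(4)]
    print(filter_2d(flat, box, 2, 2))
```

The flat-image check illustrates why the coefficients of each phase are normally chosen to sum to 1: the filter then preserves the overall brightness of the picture.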

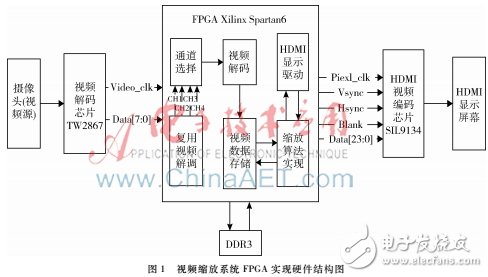

Figure 1: Hardware structure diagram of the video scaling system implemented on FPGA.

The two-dimensional filtering process is often simplified by decomposing it into two one-dimensional filters, one horizontal and one vertical. This approach reduces computational complexity and improves real-time performance. The system first applies vertical filtering to generate intermediate pixel values, followed by horizontal filtering to produce the final scaled image. For a separable filter, this reduces the number of multiplications per output pixel from HTaps × VTaps to HTaps + VTaps, making it well suited to hardware implementations with strict timing constraints.

By breaking down the two-dimensional problem into one-dimensional operations, the system becomes more efficient and easier to implement in hardware. This design is fundamental to many video scaling algorithms and enables flexible and scalable processing of video signals.
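The equivalence between the two-pass approach and the full two-dimensional filter can be checked with a small sketch. It assumes the 2D coefficients are separable, that is Coef(i, j) = h_coef[i] × v_coef[j], and it filters at the original resolution with clamped edges to keep the example short. The tap values and function names are hypothetical and are not taken from the paper.

```python
def _clamp(value, low, high):
    return max(low, min(value, high))


def vertical_pass(image, v_coef):
    """Filter every column with the 1D vertical kernel."""
    height, width, taps = len(image), len(image[0]), len(v_coef)
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            out[y][x] = sum(
                image[_clamp(y - taps // 2 + j, 0, height - 1)][x] * v_coef[j]
                for j in range(taps)
            )
    return out


def horizontal_pass(image, h_coef):
    """Filter every row with the 1D horizontal kernel."""
    height, width, taps = len(image), len(image[0]), len(h_coef)
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            out[y][x] = sum(
                image[y][_clamp(x - taps // 2 + i, 0, width - 1)] * h_coef[i]
                for i in range(taps)
            )
    return out


def filter_separable(image, h_coef, v_coef):
    """Vertical pass first, then horizontal, as described in the text."""
    return horizontal_pass(vertical_pass(image, v_coef), h_coef)


def filter_direct(image, h_coef, v_coef):
    """Reference: full 2D sum with Coef(i, j) = h_coef[i] * v_coef[j]."""
    height, width = len(image), len(image[0])
    ht, vt = len(h_coef), len(v_coef)
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            acc = 0.0
            for i in range(ht):
                for j in range(vt):
                    sx = _clamp(x - ht // 2 + i, 0, width - 1)
                    sy = _clamp(y - vt // 2 + j, 0, height - 1)
                    acc += image[sy][sx] * h_coef[i] * v_coef[j]
            out[y][x] = acc
    return out


if __name__ == "__main__":
    img = [[float((3 * r + 5 * c) % 11) for c in range(7)] for r in range(5)]
    h = [0.1, 0.4, 0.4, 0.1]   # hypothetical 4-tap kernels
    v = [0.25, 0.25, 0.25, 0.25]
    a, b = filter_separable(img, h, v), filter_direct(img, h, v)
    print(max(abs(a[r][c] - b[r][c]) for r in range(5) for c in range(7)))
```

With 4 taps in each direction, the direct form needs 16 multiplications per output pixel while the two-pass form needs 8, because each intermediate value from the vertical pass is reused by several horizontal windows.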

2. System Hardware Structure

The hardware architecture of the system is illustrated in Figure 1. It uses a Xilinx Spartan6 FPGA to implement the multi-phase interpolation algorithm. Four analog cameras provide the video input, which is then converted to digital format using the TW2867 multi-channel video decoder. The decoded data is sent to the FPGA for preprocessing. Due to differences in the refresh rates of the input and output video, the system stores the image data in DDR3 memory before performing scaling operations. After processing, the output data is encoded by the SIL9134 video encoder and displayed on a screen for evaluation.
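The role of the frame buffer in bridging different refresh rates can be illustrated with a simplified model. The sketch assumes a 25 Hz input (the paper does not state the camera frame rate), assumes that input frame n is fully stored at time n / in_rate, and assumes that each output frame simply reads the newest stored frame. The function name `displayed_frame` is hypothetical, and real DDR3 buffering schemes (for example multi-buffering to avoid tearing) are more involved.

```python
def displayed_frame(out_index, in_rate_hz, out_rate_hz):
    """Index of the newest stored input frame when output frame out_index starts.

    Output frame k starts at time k / out_rate_hz; input frame n is
    assumed to be available from time n / in_rate_hz onward.
    """
    return (out_index * in_rate_hz) // out_rate_hz


if __name__ == "__main__":
    # Assumed 25 Hz camera feeding a 60 Hz display.
    schedule = [displayed_frame(k, 25, 60) for k in range(12)]
    print(schedule)
```

In this model each input frame is shown two or three times, which is why the image must be held in memory rather than streamed straight through the scaler.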

2.1 Introduction to Related Chips

2.1.1 Video Decoding Chip

The TW2867 chip plays a critical role in converting analog video signals from the cameras into digital format. It supports multiple video standards and provides high-speed data transfer to the FPGA for further processing. This chip ensures that the input video is correctly formatted and ready for scaling operations.
