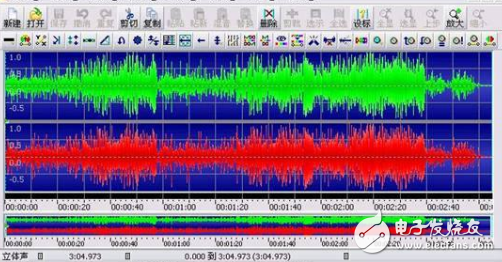

Sound is an energy wave, so it is characterized by frequency and amplitude. On a waveform plot, frequency shows up along the time axis and amplitude along the level axis. Within the audible range, voice signals occupy roughly 80 Hz to 3400 Hz, while music signals span 20 Hz to 20 kHz. Speech and music are the objects that multimedia technology deals with. Sound waves between 20 Hz and 20 kHz, which the human ear can hear, are called audible sound; those below 20 Hz are called infrasound and those above 20 kHz ultrasound. Multimedia technology studies only the audible part.

One: Three main steps of digitizing audio

Because analog sound is continuous in time, the sound signal picked up by a microphone must be digitized before a computer can process it. The usual method is PCM (pulse code modulation) coding, which converts the continuously changing analog signal into digital codes in three steps: sampling, quantization, and coding.

1. Sampling

Sampling means reading the amplitude of the sound at regular intervals. The number of samples taken per unit time is called the sampling frequency. The higher the sampling frequency, the more closely the discrete amplitude points follow the continuous analog audio curve, and the larger the amount of sampled data.
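As a minimal sketch of this step, the Python snippet below reads a pure sine tone at regular intervals. The function name `sample_sine` and the 1 kHz test tone are illustrative assumptions, not something taken from a real capture pipeline.

```python
import math

def sample_sine(freq_hz, sample_rate_hz, duration_s, amplitude=1.0):
    """Read the amplitude of a sine tone every 1/sample_rate_hz seconds."""
    n_samples = int(round(sample_rate_hz * duration_s))
    return [
        amplitude * math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
        for n in range(n_samples)
    ]

# The same 1 ms of a 1 kHz tone, sampled at two different rates.
low = sample_sine(1000, 8000, 0.001)    # 8 data points
high = sample_sine(1000, 48000, 0.001)  # 48 data points
print(len(low), len(high))
```

The higher rate yields six times as many data points for the same stretch of sound, which is the trade-off between fidelity and data volume described above.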

To ensure that digital audio can be accurately (reversibly) restored to analog audio for output, the sampling theorem requires the sampling frequency to be at least twice the highest frequency in the analog signal's spectrum.

Common audio sampling rates are 8 kHz, 11.025 kHz, 16 kHz, 22.05 kHz, 37.8 kHz, 44.1 kHz, and 48 kHz.

For example, a voice signal in the range 0.3 to 3.4 kHz can be sampled at a frequency (fs) of 8 kHz to obtain a sampled signal that can stand in for the original continuous voice signal. CDs generally use a sampling frequency of 44.1 kHz.
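The sampling theorem condition can be checked with a few lines of Python. This is only a sketch of the rule as stated here; the helper names are hypothetical.

```python
def min_sampling_rate(max_signal_hz):
    """Lowest sampling rate allowed by the 'at least twice' rule."""
    return 2 * max_signal_hz

def satisfies_sampling_theorem(sample_rate_hz, max_signal_hz):
    """True if the sampling rate is at least twice the highest signal frequency."""
    return sample_rate_hz >= min_sampling_rate(max_signal_hz)

print(min_sampling_rate(3400))                   # voice band upper limit
print(satisfies_sampling_theorem(8000, 3400))    # 8 kHz for telephone voice
print(satisfies_sampling_theorem(8000, 20000))   # 8 kHz for full-band music
print(satisfies_sampling_theorem(44100, 20000))  # CD rate for full-band music
```

Voice up to 3.4 kHz needs at least 6.8 kHz, so 8 kHz is enough, while music up to 20 kHz needs at least 40 kHz, which the 44.1 kHz CD rate covers.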

2. Quantization

Quantization converts the amplitude of each sampled sound value into a digital value that represents the signal strength.

Quantization accuracy is the number of binary digits used to represent each sampled value, also called the quantization bit depth. Sound signals are generally quantized with 4, 6, 8, 12, or 16 bits.
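To illustrate how the bit depth affects accuracy, here is a minimal sketch of a uniform quantizer in Python. It assumes samples normalized to the range -1.0 to 1.0 and signed integer codes; both choices, and the function names, are assumptions made for this example.

```python
def quantize(sample, bits):
    """Map a sample in [-1.0, 1.0] to a signed integer code of `bits` bits."""
    levels = 2 ** bits
    max_code = levels // 2 - 1
    min_code = -(levels // 2)
    code = int(round(sample * max_code))
    return max(min_code, min(max_code, code))  # clip out-of-range input

def dequantize(code, bits):
    """Map an integer code back to an approximate sample value."""
    max_code = 2 ** bits // 2 - 1
    return code / max_code

x = 0.3
for bits in (8, 16):
    error = abs(dequantize(quantize(x, bits), bits) - x)
    print(bits, quantize(x, bits), error)
```

Each extra bit doubles the number of available levels, so the round-trip error at 16 bits is far smaller than at 8 bits.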

Because both the sampling frequency and the quantization accuracy are finite, audio coding can only approximate the natural signal, however closely. In computer applications, PCM coding offers the highest fidelity, and by convention PCM is treated as lossless coding.

3. Coding

With a sampling rate of 44.1 kHz, a quantization accuracy of 16 bits, and two channels, PCM output has a data rate of 44.1 kHz × 16 bit × 2 = 1411.2 kbps. One second of audio therefore needs 176.4 KB of storage, and one minute needs 10,584 KB, about 10.34 MB when 1 MB is counted as 1024 KB. To reduce the cost of transmission and storage, the digital audio signal needs to be compressed when it is encoded.
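The arithmetic behind these figures can be reproduced as follows. The function name `pcm_bit_rate` is an illustrative assumption.

```python
def pcm_bit_rate(sample_rate_hz, bits_per_sample, channels):
    """Uncompressed PCM data rate in bits per second."""
    return sample_rate_hz * bits_per_sample * channels

bit_rate = pcm_bit_rate(44100, 16, 2)     # bits per second
bytes_per_second = bit_rate // 8
bytes_per_minute = bytes_per_second * 60

print(bit_rate / 1000, "kbps")
print(bytes_per_second / 1000, "KB per second")
print(bytes_per_minute / 1000, "KB per minute")
```

The per-minute total in megabytes depends on whether a megabyte is counted as 1000 KB or 1024 KB, so the sketch stops at kilobytes.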

So far, compressed audio signals have reached bit rates of 32 to 256 kbit/s, and voice can go as low as 8 kbit/s.

The purpose of compression coding is to minimize the amount of digital audio data without affecting how people use it. A codec is usually measured by the following six attributes:

- Bit rate;
- Signal bandwidth;
- Subjective / objective speech quality;
- Delay;
- Computational complexity and memory requirements;
- Sensitivity to channel errors.

For encoded audio to be widely usable, it must be encoded with a standard algorithm. Traditional conference TV equipment mainly adopts the G.711, G.722, and G.728 audio standards recommended by ITU-T, together with AAC-LD.

Product descriptions for audio equipment often list a variety of supported audio formats and protocols. The following sections explain the common audio protocols of Bluetooth headsets and conference TVs.


What are the common audio protocols of Bluetooth headsets

HFP: The Hands-Free Profile (HFP) lets a Bluetooth device control calls: answering, hanging up, rejecting, and voice dialing. Whether rejecting and voice dialing work depends on whether both the headset and the phone support them. Car kits are a common usage scenario: the kit connects to the phone and is used to make and receive calls. Even more common is making and receiving calls through a wireless headset connected to a mobile phone.

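HSP: The Headset Profile (HSP) provides the basic functions a Bluetooth headset needs to work with a mobile phone, such as carrying call audio and answering or ending a call. It is the simpler counterpart of HFP, which adds the richer call control commonly used for hands-free communication between cars and mobile phones.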

A2DP: The Advanced Audio Distribution Profile (A2DP) is the Bluetooth profile for audio transmission. A2DP lets the chip in the headset handle streamed audio data to deliver high-quality sound. However, not every headset that supports A2DP is a Bluetooth stereo headset. Stereo requires two channels, so a mono Bluetooth headset cannot deliver stereo. A2DP audio can reach a sampling rate of 44.1 kHz, whereas ordinary headsets reach only 8 kHz. If a mobile phone supports Bluetooth and has the A2DP profile loaded, an A2DP headset can be used with it. Some product specifications also mention Bluetooth V1.0, V1.1, V1.2, or V2.0. These are versions of the Bluetooth specification and relate to transmission speed; whether a product supports A2DP depends on whether the manufacturer has implemented it.

AVRCP: The Audio/Video Remote Control Profile (AVRCP) provides a standard interface for controlling TVs, Hi-Fi equipment, and similar devices. It allows a single remote control (or other device) to control all the A/V devices a user has access to, and it can be used together with A2DP or VDP. AVRCP defines how streaming media is controlled, including pause, stop, start of playback, volume control, and other remote control operations. It defines two roles: controller and target.

aptX: aptX is an audio compression technology. As used over Bluetooth it is lossy, for various reasons, but it runs at a relatively high bit rate, so the loss is smaller.

OPP: The Object Push Profile (OPP) is used by Bluetooth communication programs to transfer data between devices. It is split into a client side (OPPC) and a server side (OPPS). The difference between the two is that only the client side initiates a data transfer. When an accessory communicates with a mobile phone, however, either the phone or the accessory may initiate the transfer request, so the device must implement both OPPC and OPPS.

PBAP: Phone Book Access Profile (PBAP).

What are the common audio protocols for conference TV

1. ITU-T G.728

Issued by ITU-T in 1992 as a recommended standard for coding telephone voice signals. It uses LD-CELP coding with an 8 kHz sampling rate and transmits the sound signal at 16 kbit/s. The transmission delay is extremely short: the algorithmic coding delay is only 0.625 ms.
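The quoted figures can be cross-checked with a little arithmetic. The sketch below is only a consistency check on the stated 8 kHz, 16 kbit/s, and 0.625 ms values, not a description of how the codec works internally; the variable names are illustrative.

```python
from fractions import Fraction

sample_rate_hz = 8000
bit_rate_bps = 16000
delay_s = Fraction(625, 1_000_000)   # 0.625 ms as an exact fraction

samples_in_delay = sample_rate_hz * delay_s   # samples spanned by the delay
bits_in_delay = bit_rate_bps * delay_s        # coded bits sent in that time
bits_per_sample = Fraction(bit_rate_bps, sample_rate_hz)

print(samples_in_delay, bits_in_delay, bits_per_sample)
```

At these rates the 0.625 ms delay corresponds to a block of only five samples, and each sample costs two coded bits on average, against the 8 bits per sample of G.711.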

2. ITU-T G.711

Published in 1972, this standard encodes speech as non-uniformly quantized PCM. Speech is sampled at 8 kHz and each sample is quantized to 8 bits, giving an output data rate of 64 kbps. This narrowband coding supports audio from 300 to 3,400 Hz. The quality is good, but it consumes relatively large bandwidth, and it is mainly used for digital phones on digital PBX / ISDN.
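Non-uniform quantization can be illustrated with the continuous μ-law companding curve (μ = 255). Note the assumptions: G.711 itself specifies piecewise-linear μ-law and A-law encodings with their own bit layout, so the sketch below shows the principle only and is not a bit-exact G.711 implementation. All function names are hypothetical.

```python
import math

MU = 255  # companding parameter used by mu-law

def mu_law_compress(x, mu=MU):
    """Compress x in [-1, 1]: small amplitudes get a larger share of the range."""
    return math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)

def mu_law_expand(y, mu=MU):
    """Inverse of mu_law_compress."""
    return math.copysign(((1 + mu) ** abs(y) - 1) / mu, y)

def encode_8bit(x):
    """Compress, then quantize uniformly to an 8-bit code (0..255)."""
    return int(round((mu_law_compress(x) + 1) / 2 * 255))

def decode_8bit(code):
    """Map an 8-bit code back to an approximate sample value."""
    return mu_law_expand(code / 255 * 2 - 1)

quiet = 0.01
print(encode_8bit(quiet), abs(decode_8bit(encode_8bit(quiet)) - quiet))
print(8000 * 8)  # samples per second times bits per sample: 64000 bit/s
```

Because the curve is steep near zero, quiet samples are resolved much more finely than a uniform 8-bit quantizer would manage, which is why 8 bits per sample are enough for telephone-quality speech.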

3. ITU-T G.722

The ITU-T G.722 standard is the first standardized wideband speech coding algorithm for a 16 kHz sampling rate. It was defined as a standard by CCITT in 1988 and is still in use today. The G.722 codec receives 16-bit data (bandwidth from 50 Hz to 7 kHz) at 16 kHz and compresses it to 64, 56, or 48 kbit/s, with a total delay of about 3 ms, which provides better call quality.

The advantages of G.722 are very low delay, a very low transmission bit error rate, no patented technology, and low cost. It is therefore widely used in wireless communication systems, by VoIP manufacturers, in personal communication services, and in video conferencing applications.

4. G.722.1

G.722.1 is based on Polycom's third-generation Siren 7 compression technology and was approved by ITU-T as the G.722.1 standard in 1999. It uses a 16 kHz sampling frequency and 16-bit quantization, supports audio from 50 Hz to 7 kHz, and compresses it to 32 or 24 kbit/s. It uses 20 ms frames, giving an algorithmic delay of 40 ms.
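To make the frame figures concrete, the following sketch works out how many samples and coded bits one 20 ms frame holds, and the compression ratio relative to 16-bit PCM at the same sampling rate. The helper `frame_stats` is a hypothetical name used only for this example.

```python
def frame_stats(sample_rate_hz, frame_ms, bit_rate_bps, pcm_bits=16):
    """Return (samples per frame, coded bits per frame, compression ratio)."""
    samples_per_frame = sample_rate_hz * frame_ms // 1000
    bits_per_frame = bit_rate_bps * frame_ms // 1000
    compression_ratio = (sample_rate_hz * pcm_bits) / bit_rate_bps
    return samples_per_frame, bits_per_frame, compression_ratio

for rate in (24000, 32000):
    print(rate, frame_stats(16000, 20, rate))
```

A 20 ms frame at 16 kHz holds 320 samples, and the 40 ms algorithmic delay quoted above equals two such frames.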

G.722.1 achieves a lower bit rate and greater compression than the G.722 codec. Its goal is quality roughly equivalent to G.722 at about half the bit rate. Using this codec requires a license from Polycom.

5. G.722.1 Annex C

G.722.1 Annex C is based on Polycom's Siren 14 compression technology. It uses a 32 kHz sampling frequency, supports audio from 50 Hz to 14 kHz, and compresses it to 24, 32, or 48 kbps. It uses 20 ms frames, giving an algorithmic delay of 40 ms.

In mid-2005, the International Telecommunication Union (ITU) approved Polycom Siren 14™ technology as a new standard for 14 kHz ultra-wideband audio coding and adopted it as ITU-T recommendation G.722.1 Annex C. G.722.1 Annex C needs little computing power and low bandwidth, and is suitable for voice, music, and natural sounds.

6. AAC-LD

AAC (Advanced Audio Coding) is an audio compression format jointly developed by the Fraunhofer Institute (creator of the MP3 format), Dolby Laboratories, and AT&T. It is part of the MPEG-2 specification and became an international standard in March 1997. When the MPEG-4 standard took shape in 2000, MPEG-2 AAC was adopted as its core coding technology with some new coding features added; the result is known as MPEG-4 AAC.

The MPEG-4 AAC family currently has nine coding specifications. AAC-LD (Low Delay) is intended for coding at low bit rates. It supports sampling rates from 8 kHz to 48 kHz, can output audio close to CD quality at 64 kbps, and supports multiple channels. Its algorithmic delay is only 20 ms.

AAC owes much of its strength to its modular design. Its frame structure can keep accommodating new tools, which lets separately developed coding techniques be combined and build on each other's strengths.

7. Comparison of main parameters of various audio protocols:

| Protocol | Sampling frequency | Supported audio bandwidth | Output bit rate | Minimum algorithm delay |
|---|---|---|---|---|
| G.711 | 8 kHz | 300 Hz ~ 3,400 Hz | 64 kbps | <1 ms |
| G.722 | 16 kHz | 50 Hz ~ 7 kHz | 64 kbps | 3 ms |
| G.722.1 | 16 kHz | 50 Hz ~ 7 kHz | 24, 32 kbps | 40 ms |
| G.722.1 C | 32 kHz | 50 Hz ~ 14 kHz | 24, 32, 48 kbps | 40 ms |
| AAC-LD | 48 kHz | 20 Hz ~ 20 kHz | 48 ~ 64 kbps | 20 ms |
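The comparison above can also be held as data and filtered by requirement. The sketch below is illustrative only: the `Codec` class and `candidates` function are hypothetical names, the G.711 delay is taken as 1 ms as an upper bound, and AAC-LD's bit rate range is stored as its two endpoints.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Codec:
    name: str
    sample_rate_khz: float
    max_audio_hz: int       # upper edge of the supported audio bandwidth
    bit_rates_kbps: tuple   # listed output bit rates
    delay_ms: float         # minimum algorithm delay

CODECS = (
    Codec("G.711", 8, 3400, (64,), 1),          # delay assumed as 1 ms bound
    Codec("G.722", 16, 7000, (64,), 3),
    Codec("G.722.1", 16, 7000, (24, 32), 40),
    Codec("G.722.1 C", 32, 14000, (24, 32, 48), 40),
    Codec("AAC-LD", 48, 20000, (48, 64), 20),   # endpoints of 48 ~ 64 kbps
)

def candidates(max_bit_rate_kbps, max_delay_ms, min_audio_hz=0):
    """Names of codecs that fit a bit rate budget, delay budget, and bandwidth need."""
    return [
        c.name for c in CODECS
        if min(c.bit_rates_kbps) <= max_bit_rate_kbps
        and c.delay_ms <= max_delay_ms
        and c.max_audio_hz >= min_audio_hz
    ]

print(candidates(64, 20, min_audio_hz=14000))  # wide bandwidth, low delay
print(candidates(32, 40))                      # tight bit rate budget
```

Filtering this way makes the trade-off visible: a tight bit rate budget points to the G.722.1 family, while a need for both wide bandwidth and low delay leaves only AAC-LD.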

8. Comparison of advantages and disadvantages of AAC-LD and G.722.1 Annex C:

| Item | G.722.1 C | AAC-LD |
|---|---|---|
| Audio frequency range | Supports 50 Hz to 14 kHz, which is close to CD sound quality, but the high-frequency part is lost. | Supports full-band sampling from 20 Hz to 20 kHz; audio is closer to CD quality. |
| Output bit rate | 24, 32, 48 kbps; bandwidth is lower than AAC-LD, but at the expense of high frequencies. | 48 ~ 64 kbps, and supports output above 64 kbps, which makes better audio quality possible. |
| Algorithm complexity | Low algorithm complexity; CPU usage is slightly better than AAC-LD. | Modular design, more powerful, and supported by dedicated chips such as those from TI. |
| Minimum delay | 20 ms frames, 40 ms algorithm delay. | 20 ms algorithm delay, better than G.722.1 C. |
| Multi-channel | Can support dual-channel. | Supports up to 48 audio tracks and 15 low-frequency audio tracks. |
| Standard generality | Formulated and developed by Polycom, and its use requires Polycom authorization. Currently only Polycom and very few videoconferencing manufacturers use it. | As a core standard of MPEG-4, it is supported by Apple, Nokia, Panasonic, etc., and adopted by many videoconferencing manufacturers such as TANDBERG, so it has broader application prospects. |

At the same sampling frequency, AAC-LD provides better sound quality than G.722.1 C, MP3, and similar codecs. It achieves the shortest delay among ultra-wideband audio codecs while keeping sound quality close to CD. This combination of sound quality, bit rate, and delay makes it the best choice in the field of conference TV.


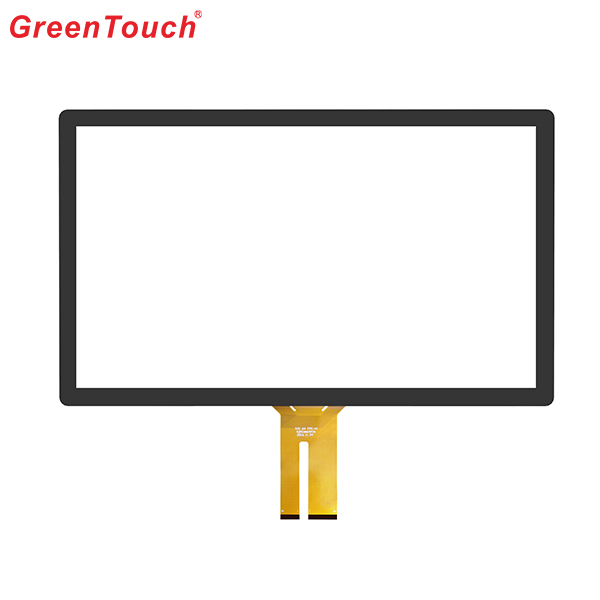

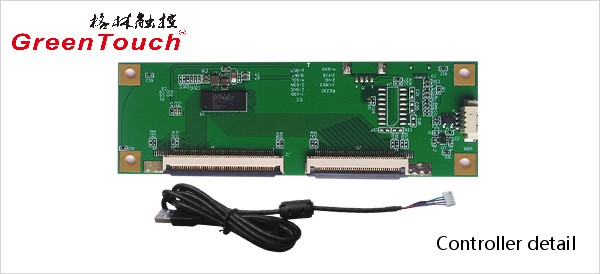

This product response time is shorter than resistive tonch screen's with a good touch.It is widely used to Phone,Tablet,E-book,gaming device by hands holding,Touch Monitor,GPS,All-In-One and so on.GreenTouch projected capacitive touch technology is with fast and sensitive response, the design with frame (without frame is optional) makes it looks professional and generous. The model is designed for high-intensity and high-frequency business environments.

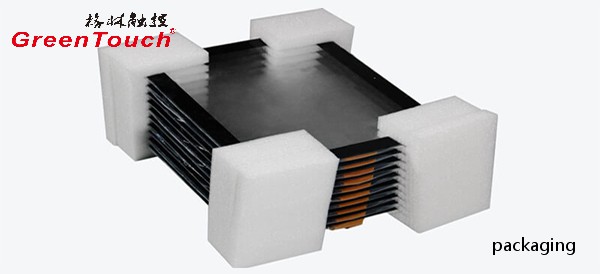

Product show:

Capacitive Touch Screen Panel,Android Touch Screen,Portable Touch Screen,Pcap Touch Screen,POS Terminal Touch Screen,Mini Capacitive Touch Screen

ShenZhen GreenTouch Technology Co.,Ltd , https://www.bbstouch.com